These last few weeks have been the most demanding and challenging yet. I have put in a lot of hard work but don't have many results to show for it. I spent most of this time on the Boosting tracker, which is based on the online AdaBoost algorithm. It took quite a while to decide how the API should look and how the tracking framework should be designed so that it is modular and later tracking algorithms can simply be slotted into the right place without having to worry about the earlier ones.

The Tracking API

A major component of these tracking algorithms is Active or Adaptive Appearance Modelling. It involves tracking targets while evolving the appearance model in order to adapt it to changing imaging conditions. I found this paper by S. Salti, A. Cavallaro and L. Di Stefano in which they describe a unified framework for trackers that use Adaptive Appearance Modelling. I have based the Tracking API partly on this paper.

The API mainly consists of different Tracker objects which contain some individual parameters along with a Tracker Model, a Tracker Sampler, a Feature Set, and a State Estimator. Each tracker will have its own implementations of model, sampler, state estimator, etc. Apart from these objects the Tracking API will have just two functions: `init_tracker()` and `update_tracker()`.

The `init_tracker()` function takes three parameters as input: `tracker::Tracker`, the tracker object; `image::Array{T,2} where T`, the frame on which the tracker is to be initialized; and `bounding_box::MVector{4,Int}`, the bounding box of the object to be tracked. In the bounding box, the first two values are the y and x coordinates of the top-left point and the next two are those of the bottom-right point. The `update_tracker()` function takes two parameters: `tracker::Tracker`, the tracker object, and `image::Array{T,2} where T`, the frame for which the tracker is to be updated. This function returns the new location of the object as a bounding box.
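To make the shape of the API concrete, here is a minimal sketch of the two functions on a toy tracker. `StaticTracker` and `check_box` are hypothetical names invented for this illustration and are not part of the actual package. The sketch also accepts any `AbstractVector` of integers for the bounding box (an assumption made to keep it dependency-free; an `MVector{4,Int}` from StaticArrays would satisfy the same signature).

```julia
# Illustrative sketch only: StaticTracker and check_box are hypothetical names.
abstract type Tracker end

# A toy tracker that never moves; it just remembers the last bounding box.
# Box layout follows the post: [y_top, x_left, y_bottom, x_right].
mutable struct StaticTracker <: Tracker
    bounding_box::Vector{Int}
    initialized::Bool
end

StaticTracker() = StaticTracker(zeros(Int, 4), false)

# Reject boxes that are malformed or fall outside the frame.
function check_box(image::AbstractMatrix, box::AbstractVector{<:Integer})
    length(box) == 4 || throw(ArgumentError("bounding box needs 4 values"))
    y1, x1, y2, x2 = box
    h, w = size(image)
    (1 <= y1 <= y2 <= h && 1 <= x1 <= x2 <= w) ||
        throw(ArgumentError("bounding box lies outside the image"))
    return nothing
end

function init_tracker(tracker::StaticTracker, image::Array{T,2},
                      bounding_box::AbstractVector{<:Integer}) where T
    check_box(image, bounding_box)
    tracker.bounding_box = collect(Int, bounding_box)
    tracker.initialized = true
    return tracker
end

function update_tracker(tracker::StaticTracker, image::Array{T,2}) where T
    tracker.initialized || error("call init_tracker first")
    # A real tracker would sample patches, compute features, estimate the
    # new state and update its model here. This toy version stays put.
    return copy(tracker.bounding_box)
end
```

A caller would construct a tracker, call `init_tracker(tracker, first_frame, [y1, x1, y2, x2])` once, and then call `update_tracker(tracker, frame)` for every subsequent frame to get the new bounding box.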

Boosting Tracker

The first tracker that I have implemented is the Boosting Tracker. The main idea in this tracker is to formulate the tracking problem as a binary classification task and to achieve robustness by continuously updating the current classifier of the target object. This tracking algorithm is presented in the paper Real-Time Tracking via On-line Boosting by H. Grabner, M. Grabner and H. Bischof.

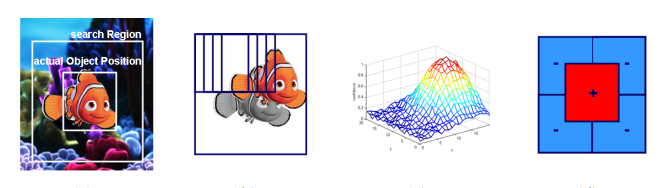

The four main steps of tracking by a classifier.


As the bounding box of the object for the first frame is available, this image region is assumed to be a positive image sample for the tracker. At the same time, negative examples are extracted by taking regions of the same size as the target window from the surrounding background. These samples are used to run several iterations of the online boosting algorithm in order to obtain a first model which is already stable. For the following frames, the current classifier is evaluated over a region of interest, giving a confidence value for each position. We analyze this confidence map and shift the target window to the location of the maximum. Once the object has been detected, the classifier has to be updated in order to adjust to possible changes in the appearance of the target object and to stay discriminative against a different background. The current target region is used as a positive update of the classifier, while the surrounding regions again represent the negative samples.
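The detection step described above (evaluate the classifier over a region of interest, then move the window to the maximum of the confidence map) can be sketched roughly as follows. This is only an illustration under assumptions: `track_step` and `search_radius` are hypothetical names, and `classify` stands in for the boosted classifier as any function that maps an image patch to a confidence score.

```julia
# Illustrative sketch: slide the target window over a small search region,
# score every candidate with `classify`, and return the best-scoring box.
# Box layout: [y_top, x_left, y_bottom, x_right].
function track_step(classify, image::AbstractMatrix,
                    box::AbstractVector{<:Integer}, search_radius::Integer)
    y1, x1, y2, x2 = box
    h, w = size(image)
    best_score = -Inf
    best_box = collect(Int, box)
    for dy in -search_radius:search_radius, dx in -search_radius:search_radius
        ny1, nx1, ny2, nx2 = y1 + dy, x1 + dx, y2 + dy, x2 + dx
        # Skip candidate windows that fall outside the frame.
        (ny1 >= 1 && nx1 >= 1 && ny2 <= h && nx2 <= w) || continue
        score = classify(view(image, ny1:ny2, nx1:nx2))
        # Keep the first window that reaches the highest confidence.
        if score > best_score
            best_score = score
            best_box = [ny1, nx1, ny2, nx2]
        end
    end
    return best_box, best_score
end
```

For example, with `sum` as a stand-in classifier, a bright square on a dark frame pulls the window onto itself. In the real tracker the returned box would then be used as the positive sample, and its surroundings as negatives, for the classifier update.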

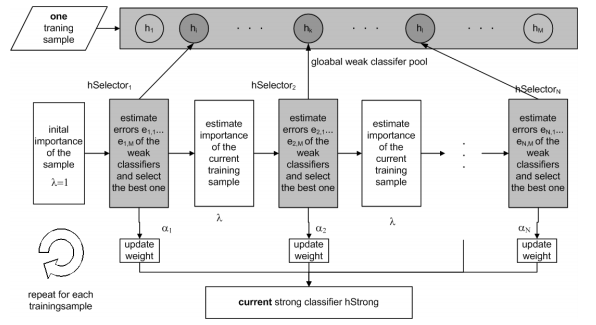

The working of the online boosting algorithm for feature selection.


I spent the first one and a half weeks writing the tracker features and state estimator for the boosting tracker. Then I spent a couple of days writing the tracker model and the tracker sampler algorithm. After this I started working on the online AdaBoost algorithm, which took most of a week. Writing this much code over more than two and a half weeks led to the expected problem that one part of my code wasn't working with another. I might have made some assumptions while writing the state estimator that I forgot about while working on the boosting algorithm. This led to a lot of time being spent debugging hundreds of lines of code. Since I was falling behind on my timeline, I started working on the MIL tracker in parallel. I finally managed to get the boosting tracker working today and sent PR #5.

MIL Tracker

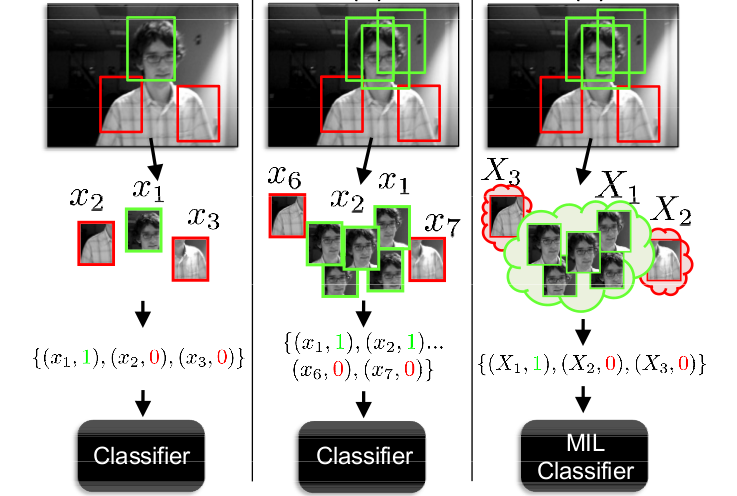

This tracker is based on the paper Visual tracking with online Multiple Instance Learning by B. Babenko, M. Yang and S. Belongie. It is similar in idea to the boosting tracker described above. The big difference is that instead of considering only the current location of the object as a positive example, it looks in a small neighborhood around the current location to generate several potential positive examples.

This may seem like a bad idea because in most of these “positive” examples the object is not centered. This is where Multiple Instance Learning (MIL) comes to the rescue. In MIL, we do not need to specify positive and negative examples, but positive and negative “bags”. Not every image in the positive bag has to be a positive example. Instead, only one image in the positive bag needs to be a positive example! In our case, a positive bag contains the patch centered on the current location of the object and also patches in a small neighborhood around it. Even if the current location of the tracked object is not accurate, when samples from the neighborhood of the current location are put in the positive bag, there is a good chance that this bag contains at least one image in which the object is nicely centered.

Positive and Negative "bags" approach for sampling.
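As a rough illustration of the bag idea, the sketch below collects candidate window positions into a positive bag (close to the current location) and a negative bag (a ring further out). The function name `sample_bags` and the parameters `pos_radius`, `neg_inner` and `neg_outer` are assumptions made for this example, not the sampler from the PR, and the sketch takes every position exhaustively rather than claiming how the actual sampler picks them. It also does not check that the windows stay inside the frame.

```julia
# Illustrative sketch: gather top-left corners (y, x) of candidate windows.
# Positive bag: offsets within `pos_radius` of the current location.
# Negative bag: offsets in the ring between `neg_inner` and `neg_outer`.
function sample_bags(center::Tuple{Int,Int}, pos_radius::Real,
                     neg_inner::Real, neg_outer::Real)
    cy, cx = center
    positive = Tuple{Int,Int}[]
    negative = Tuple{Int,Int}[]
    r = ceil(Int, neg_outer)
    for dy in -r:r, dx in -r:r
        d = hypot(dy, dx)  # Euclidean distance from the current location
        if d <= pos_radius
            push!(positive, (cy + dy, cx + dx))
        elseif neg_inner <= d <= neg_outer
            push!(negative, (cy + dy, cx + dx))
        end
    end
    return positive, negative
end
```

Calling `sample_bags((50, 60), 1, 3, 4)` returns a positive bag that includes the current location `(50, 60)` together with its immediate neighbours, and a negative bag drawn from the surrounding ring. Only the positive bag as a whole is labelled positive; no individual patch in it is.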


I have completed the sampler algorithm for MIL and am currently working on the state estimator, which should take only a couple of days. After that I will implement the tracker model and the online MIL algorithm, and move on to the MedianFlow tracker next week.